Providing Resources

This page covers the basic concepts involved in creating a Merge site; full documentation is available in the testbed operations docs.

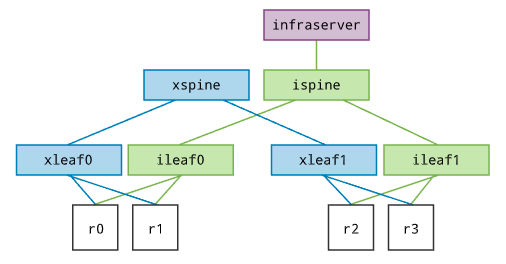

Testbed Model

Providing resources as a Merge testbed site starts with creating a model. In this example, we model a simple four-node testbed that has physically disjoint experiment and infrastructure networks.

The Go implementation of the Merge XIR SDK contains a layered set of libraries for describing testbed sites. From the bottom up, these layers are:

| Library | Description |
|---|---|
| hw | Model the hardware that comprises the testbed in detail. |
| sys | Model operating system and network level configurations in terms of hardware components. |
| tb | Model testbed resources in terms of system level components. Testbed resources include additional properties, such as allocation mode and testbed role, that determine how the Merge testbed software manages these resources. |

The code below describes the testbed topology depicted in the diagram above.
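As a rough illustration of the topology only, the sketch below uses small hypothetical stand-in types (`Link`, `Testbed`, `buildTestbed`, `disjoint`), not the XIR `tb`, `sys` or `hw` APIs. It wires four nodes to two separate switches, one per network, checks that no switch carries both networks, and prints the model as JSON. Node names, switch names and MAC addresses are made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Link attaches one node interface to a switch on a given network.
// Hypothetical stand-in type, not part of the XIR SDK.
type Link struct {
	Node    string `json:"node"`
	NodeMAC string `json:"mac"`
	Switch  string `json:"switch"`
	Network string `json:"network"` // "infra" or "xp"
}

// Testbed is a hypothetical, flattened testbed model.
type Testbed struct {
	Nodes    []string `json:"nodes"`
	Switches []string `json:"switches"`
	Links    []Link   `json:"links"`
}

// buildTestbed wires four nodes to two physically separate switches:
// "ileaf" for the infrastructure network and "xleaf" for the experiment network.
func buildTestbed() Testbed {
	tb := Testbed{Switches: []string{"ileaf", "xleaf"}}
	for i := 0; i < 4; i++ {
		name := fmt.Sprintf("n%d", i)
		tb.Nodes = append(tb.Nodes, name)
		tb.Links = append(tb.Links,
			Link{Node: name, NodeMAC: fmt.Sprintf("02:00:00:00:00:%02x", 2*i), Switch: "ileaf", Network: "infra"},
			Link{Node: name, NodeMAC: fmt.Sprintf("02:00:00:00:00:%02x", 2*i+1), Switch: "xleaf", Network: "xp"},
		)
	}
	return tb
}

// disjoint reports whether every switch carries links from only one network.
func disjoint(tb Testbed) bool {
	seen := map[string]string{}
	for _, l := range tb.Links {
		if prev, ok := seen[l.Switch]; ok && prev != l.Network {
			return false
		}
		seen[l.Switch] = l.Network
	}
	return true
}

func main() {
	tb := buildTestbed()
	out, err := json.MarshalIndent(tb, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
	fmt.Println("networks disjoint:", disjoint(tb))
}
```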

Key Modeling Concepts

Resources

Network testbeds are made up of interconnected nodes. In the model above, we define these nodes as Resources using the testbed modeling library, filling in relevant details such as names, MAC addresses, and tags that link them to provisional resource properties.

This example abstracts away specific hardware details about the resources to keep the presentation simple. However, the Merge hardware modeling library provides extensive support for modeling resources in great detail. Consider the following example of modeling a network emulation server, taken from a current Merge testbed facility:
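The sketch below suggests the kind of detail a hardware model can carry. It is a minimal illustration assuming hypothetical `Device`, `CPU`, `DIMM`, `NIC` and `Port` types (not the Merge `hw` library), and the component counts are placeholders, not the specification of any real facility's emulation server. Aggregating capacity from individual components is one reason detailed hardware models are useful.

```go
package main

import "fmt"

// Hypothetical hardware-model types; not the XIR hw package API.
type Port struct{ Gbps int }

type NIC struct {
	Model string
	Ports []Port
}

type CPU struct {
	Model string
	Cores int
}

type DIMM struct{ GB int }

type Device struct {
	Name   string
	CPUs   []CPU
	Memory []DIMM
	NICs   []NIC
}

// Capacity sums cores, memory and NIC port bandwidth across all components.
func (d Device) Capacity() (cores, memGB, portGbps int) {
	for _, c := range d.CPUs {
		cores += c.Cores
	}
	for _, m := range d.Memory {
		memGB += m.GB
	}
	for _, n := range d.NICs {
		for _, p := range n.Ports {
			portGbps += p.Gbps
		}
	}
	return
}

// emuServer builds an illustrative emulation server; all models and
// counts are made-up placeholders.
func emuServer(name string) Device {
	d := Device{Name: name}
	for i := 0; i < 2; i++ {
		d.CPUs = append(d.CPUs, CPU{Model: "example-cpu", Cores: 16})
		d.NICs = append(d.NICs, NIC{Model: "example-2x100g", Ports: []Port{{100}, {100}}})
	}
	for i := 0; i < 8; i++ {
		d.Memory = append(d.Memory, DIMM{GB: 32})
	}
	return d
}

func main() {
	cores, mem, bw := emuServer("emu0").Capacity()
	fmt.Printf("cores=%d memory=%dGB ports=%dGbps\n", cores, mem, bw)
}
```

For the placeholder configuration this prints `cores=32 memory=256GB ports=400Gbps`.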

Cables

Cables can be deceptively complex. From optical breakouts with differing polarity configurations, to multi-lane DAC trunks, to a dizzying array of small form-factor pluggable transceivers for both copper and optics that support a complex matrix of protocol stacks, testbed cabling can get very complicated in a hurry.

The cable modeling library provides support for capturing all the details about cables that are needed to operate a testbed facility. This library grows rather organically based on the needs of facility operators. Contributions to this and all of the XIR libraries are more than welcome.
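To make the breakout case concrete, here is a minimal sketch assuming hypothetical `Cable` and `Connector` types (not the XIR cable library). It models a QSFP28 trunk split into four single-lane SFP28 legs at 25 Gbps each and checks that both ends carry the same aggregate capacity.

```go
package main

import "fmt"

// Hypothetical cable-model types for illustration; not the XIR cable API.
type Connector struct {
	FormFactor string // e.g. "QSFP28", "SFP28"
	Lanes      int
	LaneGbps   int
}

type Cable struct {
	Kind string // e.g. "DAC breakout"
	EndA []Connector
	EndB []Connector
}

// breakout models one multi-lane trunk connector on end A fanned out
// into `legs` single-lane connectors on end B.
func breakout(kind, trunkFF, legFF string, legs, laneGbps int) Cable {
	c := Cable{Kind: kind, EndA: []Connector{{trunkFF, legs, laneGbps}}}
	for i := 0; i < legs; i++ {
		c.EndB = append(c.EndB, Connector{legFF, 1, laneGbps})
	}
	return c
}

// capacity returns the total Gbps across all connectors at one end.
func capacity(end []Connector) int {
	total := 0
	for _, c := range end {
		total += c.Lanes * c.LaneGbps
	}
	return total
}

func main() {
	c := breakout("DAC breakout", "QSFP28", "SFP28", 4, 25)
	fmt.Println(len(c.EndB), capacity(c.EndA), capacity(c.EndB)) // 4 100 100
}
```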

Provisional Resource Properties

Provisional resource properties are properties of resources that can be provisioned by the testbed facility. A common example is operating system images. OS images are not an intrinsic property of a particular resource; they are provisioned by the testbed facility. Typically, a testbed facility will have a range of resources, a range of OS images, and a compatibility matrix that relates the two.

Supporting provisional resources in a testbed facility is done in two parts. First, there is a provisional resource property map that is a top-level property of the testbed model. This is a multi-level property map where:

- The first level is a key defined by a property tag. In the example above we used `*`, as all the nodes in our example reference this property set. However, there could be a more specific property set that applies only to a specific type of hardware. For example, the `minnow` key could provide a set of provisional properties for MinnowBoard embedded computers in a testbed facility.

- Below the first level is a generic dictionary of properties keyed by string. As the example above shows, the `image` property maps onto an array of strings that define the available images. When users create models with OS image requirements, the `image` property map is used to determine whether the nodes in this testbed satisfy the user's experimental requirements.
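A minimal sketch of the two-level map, assuming for simplicity that every property value is a list of strings (the real map is a generic dictionary). The type name `Props`, the `images` helper and the image names are hypothetical.

```go
package main

import "fmt"

// Props is a hypothetical rendering of the provisional property map:
// property tag -> property name -> values.
type Props map[string]map[string][]string

// images returns the OS images available under a property tag, or nil if
// the tag or its "image" property is absent (reading a nil map is safe).
func (p Props) images(tag string) []string {
	return p[tag]["image"]
}

func main() {
	props := Props{
		// "*" applies to every node in the example testbed.
		"*": {"image": {"image-a", "image-b"}},
		// A hardware-specific set, e.g. for MinnowBoard computers.
		"minnow": {"image": {"image-embedded"}},
	}
	fmt.Println(props.images("*"))      // [image-a image-b]
	fmt.Println(props.images("minnow")) // [image-embedded]
}
```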

The second part is the provisional tags carried by each resource. In the example above, the provisional tags were supplied as the final argument to `SmallServer`, `MediumServer` and `LargeServer`, respectively. The provisional tags, typically referred to as ptags, bind a resource to a provisional property set of the testbed facility.
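The binding can be sketched as a lookup from each ptag into the property map. This assumes hypothetical `Props` and `Resource` types and treats a resource as supporting an image if any of its ptags lists that image; that matching rule is an assumption for illustration, not a statement of how Merge evaluates requirements.

```go
package main

import "fmt"

// Props: property tag -> property name -> values (hypothetical type).
type Props map[string]map[string][]string

// Resource carries the ptags that bind it to provisional property sets.
type Resource struct {
	Name  string
	PTags []string
}

// supportsImage reports whether any property set bound by the resource's
// ptags lists the requested image.
func supportsImage(props Props, r Resource, image string) bool {
	for _, tag := range r.PTags {
		for _, img := range props[tag]["image"] {
			if img == image {
				return true
			}
		}
	}
	return false
}

func main() {
	props := Props{
		"*":      {"image": {"image-a", "image-b"}},
		"minnow": {"image": {"image-embedded"}},
	}
	server := Resource{Name: "n0", PTags: []string{"*"}}
	board := Resource{Name: "m0", PTags: []string{"minnow"}}
	fmt.Println(supportsImage(props, server, "image-a")) // true
	fmt.Println(supportsImage(props, board, "image-a"))  // false
}
```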

Deployment

Once the testbed model has been created, it can be used to generate configurations for every component in the testbed.

Deployment of a Merge testbed site includes the following steps.

- Deploying the testbed XIR model to infrapod nodes.

- Installing the Merge commander on infrapod nodes.

- Installing the Cogs automation system on infrapod nodes.

- Installing Canopy on switches and provisioning the base Merge configuration.

- Setting up nodes to network boot if needed.

Each of these steps is outlined in more detail below.

Testbed model deployment

The testbed XIR model (the JSON generated from our program above) must be installed at the path /etc/cogs/tb-xir.json on each infrapod server. Once the testbed automation software is installed on these nodes, it will use the testbed model to perform materialization operations.

Installing the Merge commander

The Merge commander is responsible for receiving materialization commands from a Merge portal and forwarding them to the appropriate driver. In this example, the driver is part of the Cogs automation software, and there is only one infrapod. In more sophisticated setups, the commander may run on a dedicated node and load-balance requests across a replicated set of drivers running on a set of infraserver nodes.
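The text does not say how the commander balances load across replicated drivers; round robin is one simple strategy, sketched here with a hypothetical `Driver` type and `RoundRobin` picker. It uses `atomic.Uint64`, which requires Go 1.19 or later.

```go
package main

import (
	"errors"
	"fmt"
	"sync/atomic"
)

// Driver is a hypothetical handle for one replica of a materialization driver.
type Driver struct{ Addr string }

// RoundRobin hands out drivers in rotation. Pick is safe for concurrent
// use because the counter is advanced atomically.
type RoundRobin struct {
	drivers []Driver
	next    atomic.Uint64
}

// NewRoundRobin returns a picker over a non-empty set of drivers.
func NewRoundRobin(drivers ...Driver) (*RoundRobin, error) {
	if len(drivers) == 0 {
		return nil, errors.New("at least one driver is required")
	}
	return &RoundRobin{drivers: drivers}, nil
}

// Pick returns the next driver in rotation, starting with the first.
func (r *RoundRobin) Pick() Driver {
	n := r.next.Add(1) - 1
	return r.drivers[n%uint64(len(r.drivers))]
}

func main() {
	rr, err := NewRoundRobin(Driver{"drv-a"}, Driver{"drv-b"}, Driver{"drv-c"})
	if err != nil {
		panic(err)
	}
	for i := 0; i < 4; i++ {
		fmt.Println(rr.Pick().Addr) // drv-a, drv-b, drv-c, drv-a
	}
}
```

Round robin ignores driver load and health; a production commander might prefer least-outstanding-requests or health-checked selection.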

The Merge commander is available as a Debian package from the Merge package server and can be installed as follows.

All communication between the Merge portal and a Merge commander is encrypted and requires TLS client authentication. This means that for each commander, a TLS certificate must be generated and provided to the portal. This certificate must be placed at /etc/merge/cmdr.pem on all servers running the commander daemon. Documentation on generating certificates can be found here.